OpenAI just dropped Agent Builder last week, and it's quietly changing how we think about web scraping. Instead of wrestling with Selenium selectors that break every time a website updates, or writing 200 lines of Playwright code just to bypass a CAPTCHA, you can now build browser agents that actually understand what they're looking at.

I've spent the last few days testing Agent Builder's web scraping capabilities, and here's what I've learned: it works surprisingly well for certain tasks, has some annoying limitations, and opens up possibilities that traditional scrapers can't touch. Let me show you how to use it.

What is OpenAI Agent Builder?

Agent Builder is OpenAI's new visual workflow tool that lets you create AI agents without writing code. Think Zapier meets browser automation, but with GPT-5's vision capabilities built in.

It's part of AgentKit, OpenAI's suite for building, deploying, and optimizing agents, launched at DevDay 2025 on October 6th. The platform includes a drag-and-drop canvas where you connect nodes, add logic, configure tools, and set guardrails—all without touching a terminal.

But here's the interesting part for web scraping: Agent Builder has access to the Computer Use tool, which lets agents interact with browsers by actually seeing the screen and taking actions like clicking, typing, and scrolling—just like a human would.

Why Use Agent Builder for Web Scraping?

Traditional web scraping hits a wall when websites get complex. You've probably experienced this:

- A site redesigns their layout and your CSS selectors break

- Dynamic content loads via JavaScript, requiring headless browsers

- Anti-bot systems block your scraper after three requests

- You need to navigate multi-step workflows (login, search, filter, extract)

- The data you want isn't in the HTML—it's in a table rendered as an image

Agent Builder solves these problems differently. The Computer-Using Agent (CUA) model combines GPT-4o's vision capabilities with reinforcement learning, trained to interact with graphical user interfaces the same way humans do. It doesn't care if the HTML changes—it's looking at pixels, not DOM nodes.

When Agent Builder shines:

- Sites with frequent layout changes

- Interactive workflows requiring human-like navigation

- Pages protected by CAPTCHAs or bot detection

- Data extraction from images, PDFs, or non-standard formats

- Tasks where you'd normally hire a VA to manually collect data

When you should stick with traditional scrapers:

- Simple, static pages with clean HTML

- High-volume scraping (Agent Builder has usage limits)

- When you need millisecond-level performance

- Budget-conscious projects (LLM calls add up)

Getting Started with Agent Builder

Prerequisites

Before building your first scraping agent, you'll need:

- An OpenAI account with API access

- Agent Builder beta access (currently rolling out)

- Basic understanding of web scraping concepts

- Your scraping target identified

Agent Builder is currently in beta, while other AgentKit features like ChatKit are generally available. You can request access through the OpenAI platform.

Two Approaches: Visual vs. Code

Agent Builder offers two ways to build scraping agents:

Visual-first approach (Agent Builder UI): Drag-and-drop nodes, versioning, and guardrails on a visual canvas. Use templates or start from a blank canvas.

Code-first approach (Agents SDK): Build agents in Node, Python, or Go with a type-safe library that's 4× faster than manual prompt-and-tool setups.

For web scraping, I recommend starting with the Agents SDK. You get more control, can version your code properly, and it's easier to debug when things go wrong (and they will).

Building Your First Web Scraping Agent

Let's build an agent that scrapes product information from an e-commerce site. We'll use the Agents SDK with Python—the fastest way to get something working.

Step 1: Environment Setup

First, create a project directory and set up a virtual environment:

mkdir web-scraper-agent

cd web-scraper-agent

python3 -m venv .venv

source .venv/bin/activate # On Windows: .venv\Scripts\activate

Install the required dependencies:

pip install openai-agents playwright requests markdownify python-dotenv

playwright install chromium

Create a .env file for your API key:

OPENAI_API_KEY=your_api_key_here

Why these dependencies? The openai-agents package is the official Agents SDK. Playwright handles browser automation. markdownify converts HTML to a cleaner format that costs less to process with LLMs (important for staying under token limits).

Step 2: Create a Basic Computer Use Agent

Here's the skeleton of a web scraping agent. Save this as scraper_agent.py:

import os

from dotenv import load_dotenv

from agents import Agent, Runner, ComputerTool

from agents.models import OpenAIResponsesModel

load_dotenv()

# Initialize the model with computer use capabilities

model = OpenAIResponsesModel(

model="computer-use-preview-2025-03-11",

api_key=os.getenv("OPENAI_API_KEY")

)

# Define the scraping agent

scraping_agent = Agent(

name="Web Scraper",

model=model,

instructions="""You are a web scraping specialist. Your job is to:

1. Navigate to the specified URL

2. Locate the requested information on the page

3. Extract and structure the data accurately

4. Handle any popups or cookie banners

Be methodical and verify you're extracting the correct elements.""",

tools=[ComputerTool()]

)

# Run the agent

async def scrape_product_data(url: str, data_points: list):

runner = Runner()

task = f"""

Navigate to {url} and extract the following information:

{', '.join(data_points)}

Return the data in a structured format.

"""

result = await runner.run(scraping_agent, task)

return result

# Example usage

if __name__ == "__main__":

import asyncio

url = "https://example-shop.com/product/123"

data_points = ["product name", "price", "availability"]

result = asyncio.run(scrape_product_data(url, data_points))

print(result)

This creates an agent with access to the ComputerTool, which allows automating computer use tasks through browser interaction.

What's happening here? The agent receives a task, uses the ComputerTool to launch a browser, navigates to the URL, and extracts the requested data by actually viewing the page and interacting with it.

Step 3: Add Custom Tools for Data Processing

The Computer Use tool handles navigation, but you'll often want to add custom tools for data processing. Here's how to create a tool that cleans and structures the extracted data:

from agents import function_tool

import json

import re

@function_tool

def clean_price_data(raw_price: str) -> dict:

"""

Extracts numeric price from various formats like '$19.99', '19,99 €', etc.

Returns structured price data with currency.

"""

# Remove common price prefixes/suffixes

cleaned = re.sub(r'[^\d.,€$£¥]', '', raw_price)

# Extract numeric value

numeric = re.search(r'[\d.,]+', cleaned)

price_value = float(numeric.group().replace(',', '.')) if numeric else 0.0

# Detect currency

currency_symbols = {'$': 'USD', '€': 'EUR', '£': 'GBP', '¥': 'JPY'}

currency = next((currency_symbols[s] for s in raw_price if s in currency_symbols), 'USD')

return {

"value": price_value,

"currency": currency,

"original": raw_price

}

@function_tool

def structure_product_data(name: str, price: str, stock: str) -> dict:

"""

Structures product data into a consistent format.

"""

return {

"product_name": name.strip(),

"price": clean_price_data(price),

"in_stock": "in stock" in stock.lower() or "available" in stock.lower(),

"raw_stock_text": stock

}

Now update your agent to use these tools:

scraping_agent = Agent(

name="Web Scraper",

model=model,

instructions="""You are a web scraping specialist. After extracting data:

1. Use clean_price_data() to process any price information

2. Use structure_product_data() to format the final output

Always validate the data before returning it.""",

tools=[

ComputerTool(),

clean_price_data,

structure_product_data

]

)

Why separate tools? Breaking functionality into discrete tools makes your agent more reliable. The Computer Use tool handles navigation, while custom tools handle data processing. This separation also makes debugging much easier.

Handling Complex Scraping Scenarios

Real-world scraping is messy. Here's how to handle common challenges:

Dealing with Popups and Cookie Banners

Add specific instructions to your agent's prompt:

instructions="""Before starting extraction:

1. Look for and dismiss any cookie consent banners

2. Close any promotional popups

3. Wait for the page to fully load (look for loading indicators)

Common cookie banner buttons: 'Accept', 'Accept All', 'I Agree', 'OK'

If a banner blocks the content, you MUST dismiss it first."""

Scraping Multiple Pages

For multi-page scraping, create a workflow that combines navigation and extraction:

@function_tool

def scrape_product_list(base_url: str, num_pages: int) -> list:

"""

Scrapes products from multiple pages of a listing.

"""

all_products = []

for page in range(1, num_pages + 1):

url = f"{base_url}?page={page}"

task = f"Go to {url} and extract all product names and prices visible on the page"

# Run agent for this page

products = asyncio.run(runner.run(scraping_agent, task))

all_products.extend(products)

return all_products

Bypassing Anti-Bot Measures

This is where Agent Builder's vision capabilities shine. The CUA model can see screenshots and interact with the browser like a human, making it harder for sites to detect as a bot.

However, you should still:

- Add delays between actions: Humans don't click instantly

- Randomize interaction patterns: Don't follow the exact same path every time

- Use residential proxies: For high-value scraping targets (requires additional setup)

- Respect robots.txt: Even if you can bypass it

Here's how to add natural delays:

import random

import time

@function_tool

def human_like_delay():

"""Adds a random delay to mimic human behavior."""

time.sleep(random.uniform(1.0, 3.0))

# Add to your agent's workflow

scraping_agent = Agent(

name="Web Scraper",

instructions="""After each page load, call human_like_delay()

before interacting with elements. This makes your behavior more natural.""",

tools=[ComputerTool(), human_like_delay, ...]

)

Using Agent Builder's Visual Interface

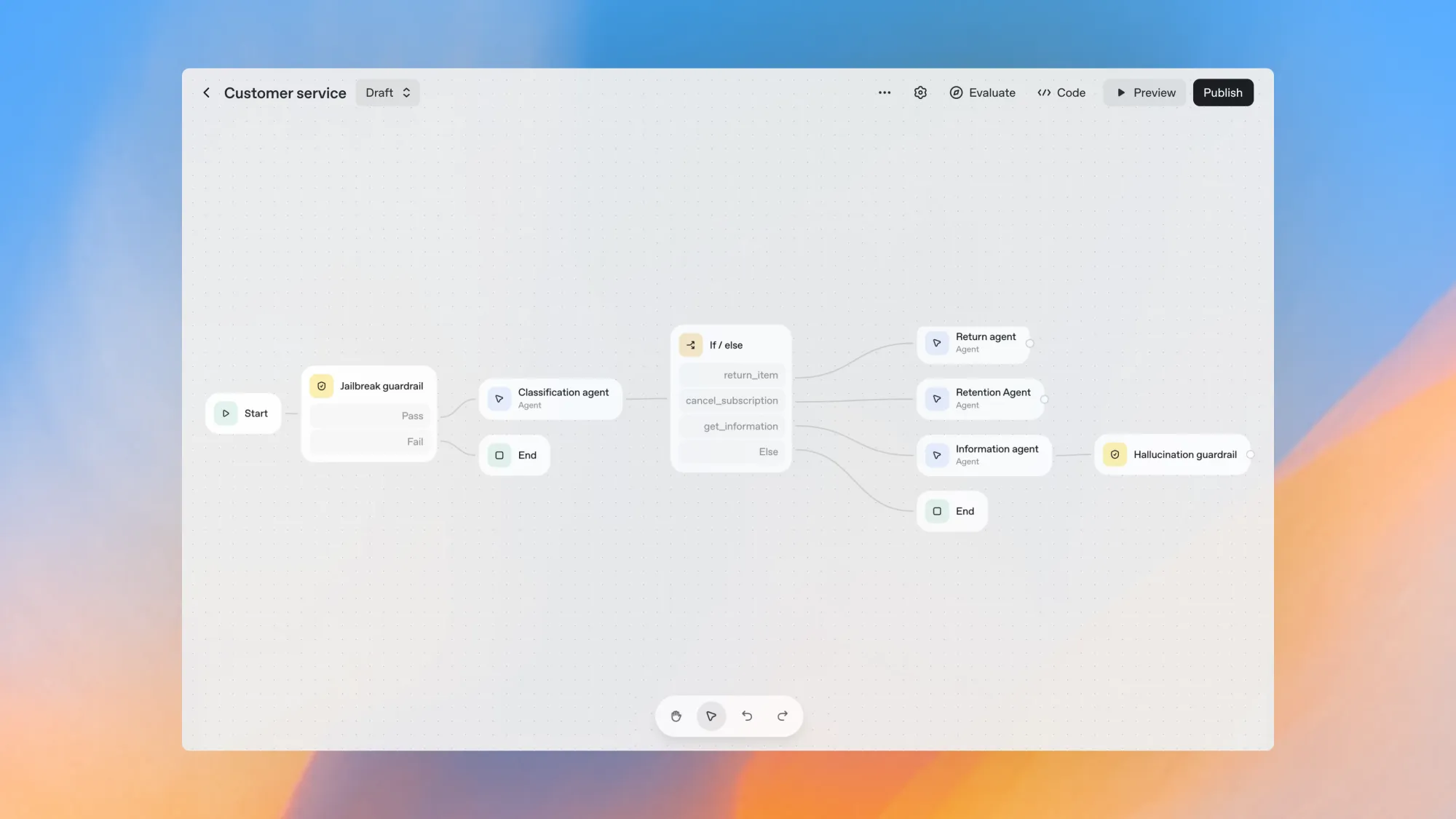

If you prefer a no-code approach, Agent Builder provides a visual canvas for composing logic with drag-and-drop nodes, connecting tools, and configuring custom guardrails.

Here's how to build a scraping workflow visually:

- Start node: Define your input (target URL, data to extract)

- Computer Use node: Navigate to the URL and capture page state

- Logic node: Check if the page loaded successfully

- Extraction node: Pull specific data using vision-based element detection

- Output node: Format and return the structured data

Templates to try: Agent Builder includes predefined templates like data enrichment routines and Q&A agents that you can adapt for scraping tasks.

The visual builder is great for:

- Rapid prototyping without code

- Collaborating with non-technical team members

- Creating reusable scraping templates

It falls short when you need:

- Complex data transformation logic

- Integration with existing Python codebases

- Version control through Git

Real-World Use Cases

Here are scenarios where Agent Builder excels at web scraping:

1. Competitive Price Monitoring

Track competitor prices across multiple e-commerce platforms, even when they use different layouts or anti-scraping measures:

competitor_monitor = Agent(

name="Price Monitor",

instructions="""Monitor prices for specific products across competitor websites.

For each site:

1. Search for the product by name

2. Navigate to the product page

3. Extract current price and stock status

4. Note any active promotions or discounts""",

tools=[ComputerTool(), clean_price_data]

)

2. Job Posting Aggregation

Collect job listings from sites without APIs, handling various formats and pagination:

job_scraper = Agent(

name="Job Aggregator",

instructions="""Collect job postings that match specific criteria.

Extract: title, company, location, salary, description, apply link.

Handle pagination until you reach listings older than 7 days.""",

tools=[ComputerTool(), structure_job_data]

)

3. Research Data Collection

Gather academic papers, citations, or research data from multiple sources:

research_agent = Agent(

name="Research Collector",

instructions="""For each paper title provided:

1. Search on Google Scholar

2. Extract citation count, authors, year

3. Download the PDF if available

4. Extract abstract and key findings""",

tools=[ComputerTool(), WebSearchTool()]

)

Limitations and Workarounds

Agent Builder isn't perfect for web scraping. Here are the main limitations I've encountered:

1. Cost

Every browser interaction consumes tokens. For high-volume scraping, this adds up quickly.

Workaround: Use Agent Builder for difficult sites, traditional scrapers for simple ones. Hybrid approach works best.

2. Speed

ChatGPT agent can be very slow—if you want a quick answer, you're better off doing the research yourself. The same applies to Agent Builder's scraping.

Workaround: Use Agent Builder for exploration and prototyping, then convert successful workflows to faster traditional scrapers for production.

3. Reliability

The agent might interpret visual elements differently than expected, especially on edge cases.

Workaround: Add validation tools that verify extracted data matches expected formats:

@function_tool

def validate_product_data(data: dict) -> bool:

"""Validates scraped data meets quality requirements."""

required_fields = ['product_name', 'price', 'in_stock']

# Check all fields present

if not all(field in data for field in required_fields):

return False

# Validate price is reasonable

if data['price']['value'] <= 0 or data['price']['value'] > 10000:

return False

return True

4. Authentication Required

If a site requires login, you'll need to handle credentials carefully.

Workaround: Use environment variables and have the agent take over the browser for authentication:

scraping_agent = Agent(

instructions="""When prompted to log in:

1. Enter username from environment

2. Enter password from environment

3. Click login button

4. Wait for successful authentication

5. Proceed with scraping task

Store cookies to avoid repeated logins.""",

tools=[ComputerTool()]

)

Advanced Techniques

Combining Agent Builder with Traditional Tools

The most powerful approach combines Agent Builder's intelligence with traditional scraping speed:

def hybrid_scrape(url: str):

"""Uses Agent Builder for navigation, BeautifulSoup for extraction."""

# Use agent to navigate and handle dynamic content

agent_task = f"Navigate to {url} and wait for all content to load"

page_html = asyncio.run(runner.run(navigation_agent, agent_task))

# Use traditional parsing for fast data extraction

from bs4 import BeautifulSoup

soup = BeautifulSoup(page_html, 'html.parser')

products = soup.find_all('div', class_='product-card')

return [extract_product_data(p) for p in products]

Monitoring and Alerting

Set up agents that run on schedule and alert you to changes:

@function_tool

def check_price_drop(product_url: str, threshold: float) -> dict:

"""Monitors product price and alerts on significant drops."""

current_price = scrape_current_price(product_url)

if current_price < threshold:

return {

"alert": True,

"message": f"Price dropped to {current_price}!",

"url": product_url

}

return {"alert": False}

Handling Rate Limits

Implement exponential backoff when you hit rate limits:

import time

from functools import wraps

def retry_with_backoff(max_attempts=3):

def decorator(func):

@wraps(func)

async def wrapper(*args, **kwargs):

for attempt in range(max_attempts):

try:

return await func(*args, **kwargs)

except Exception as e:

if attempt == max_attempts - 1:

raise

wait_time = 2 ** attempt

print(f"Attempt {attempt + 1} failed, waiting {wait_time}s...")

time.sleep(wait_time)

return wrapper

return decorator

@retry_with_backoff(max_attempts=5)

async def scrape_with_retry(url: str):

return await runner.run(scraping_agent, f"Scrape data from {url}")

Best Practices

After testing Agent Builder extensively for web scraping, here's what works:

- Start simple: Build agents for single pages before tackling complex workflows

- Use specific instructions: Vague prompts like "scrape the data" fail. Be explicit about what to extract and how to handle errors

- Add validation layers: Never trust extracted data without verification

- Monitor token usage: Track API costs—they can surprise you

- Version your agents: Agent Builder supports full versioning, ideal for fast iteration

- Test on multiple examples: Agents that work on one page might fail on slight variations

- Implement fallbacks: Always have a backup plan when the agent fails

- Respect ethical boundaries: Just because you can scrape something doesn't mean you should

Wrapping Up

OpenAI's Agent Builder changes the web scraping game by making vision-based extraction accessible to developers. You're no longer limited to parsing HTML—your scrapers can now see and interact with pages like humans do.

The sweet spot? Use Agent Builder for complex, dynamic sites where traditional scrapers struggle. For simple, structured data, stick with BeautifulSoup or Playwright. For production systems, consider a hybrid approach that leverages both.

Agent Builder is still in beta, with more features coming, including better deployment options and workflow APIs. But even in its current state, it's already useful for web scraping tasks that were previously too difficult or expensive to automate.

The biggest limitation isn't technical—it's cost. Every browser interaction runs through a vision model, and those tokens add up. But for medium-volume scraping of difficult sites? Agent Builder is absolutely worth trying.

What's next? Start with the Agents SDK, build a simple scraper for a site you already know, and see how the agent handles it. You'll quickly learn where it excels and where it falls short. Then you can make informed decisions about when to use it in production.

The future of web scraping isn't just about parsing HTML faster—it's about building agents that can adapt to any site, regardless of structure. Agent Builder is our first real taste of that future.

![IPRoyal vs Oxylabs: Which provider is better? [2026]](https://cdn.roundproxies.com/blog-images/2026/02/iproyal-vs-oxylabs-1.png)