n8n's low-code workflow automation combined with Roundproxies' rotating IP infrastructure lets you build enterprise-grade scrapers without drowning in boilerplate code. This guide shows you how to build a smart proxy rotation system that actually survives at scale, complete with health monitoring and automatic failover mechanisms.

What Makes This Setup Different

Most n8n scraping tutorials stop at basic HTTP requests. We're going deeper - building a proxy management system that:

- Rotates IPs intelligently based on success rates

- Monitors proxy health in real-time

- Automatically retries failed requests through different proxies

- Bypasses rate limits without manual intervention

Prerequisites and Initial Setup

Before diving into the workflow, you'll need:

- An n8n instance (self-hosted or cloud)

- A Roundproxies account with residential or ISP proxies

- Basic understanding of HTTP requests and JSON

Setting Up Your Roundproxies Account

Roundproxies offers two proxy types that work perfectly with n8n:

Residential Proxies: Best for scraping heavily protected sites. They rotate automatically with each request and provide IPs from real devices.

ISP Proxies: Faster and more stable, ideal for high-volume scraping of moderately protected sites.

After signing up, grab your proxy credentials from the dashboard. You'll get:

- Proxy endpoint:

proxy.roundproxies.com:8080 - Username: Your account username

- Password: Your proxy password

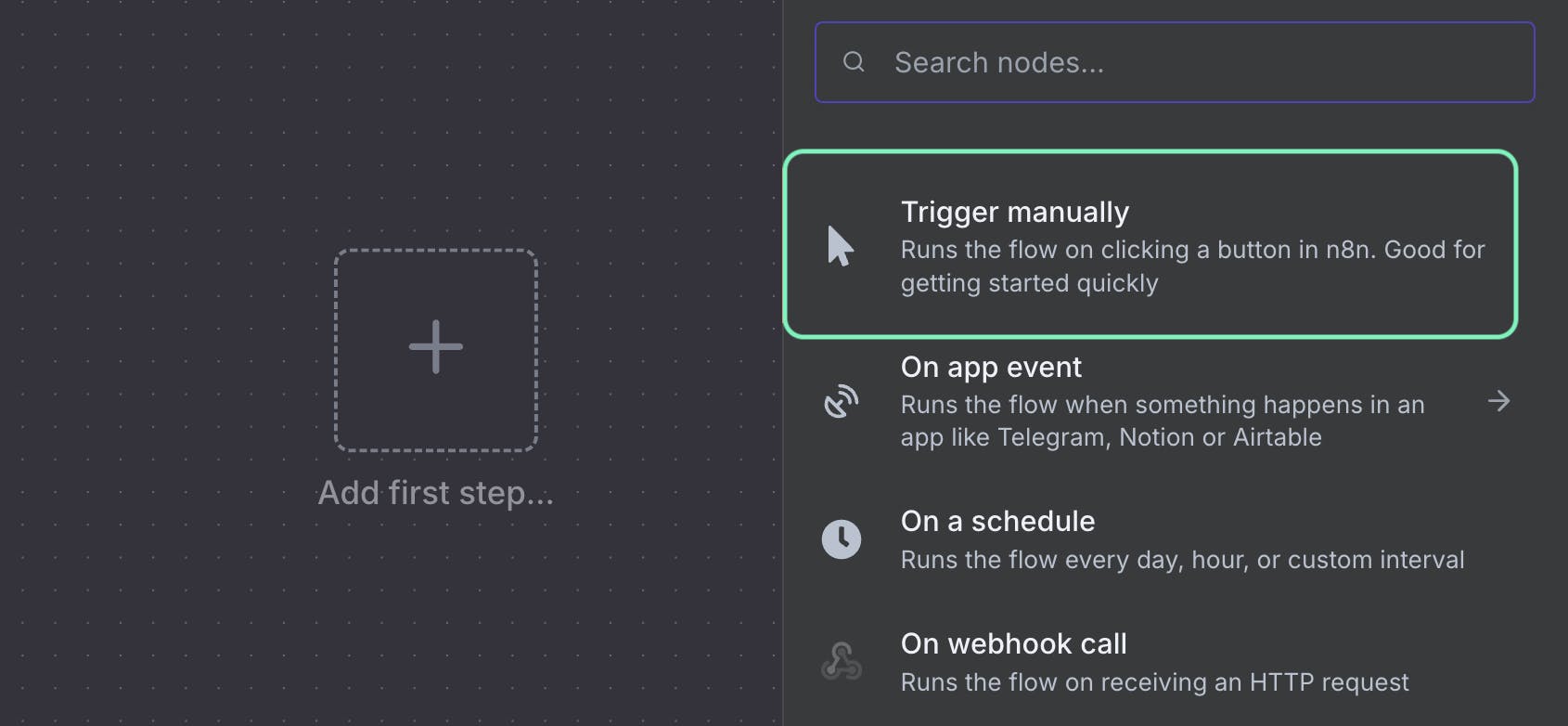

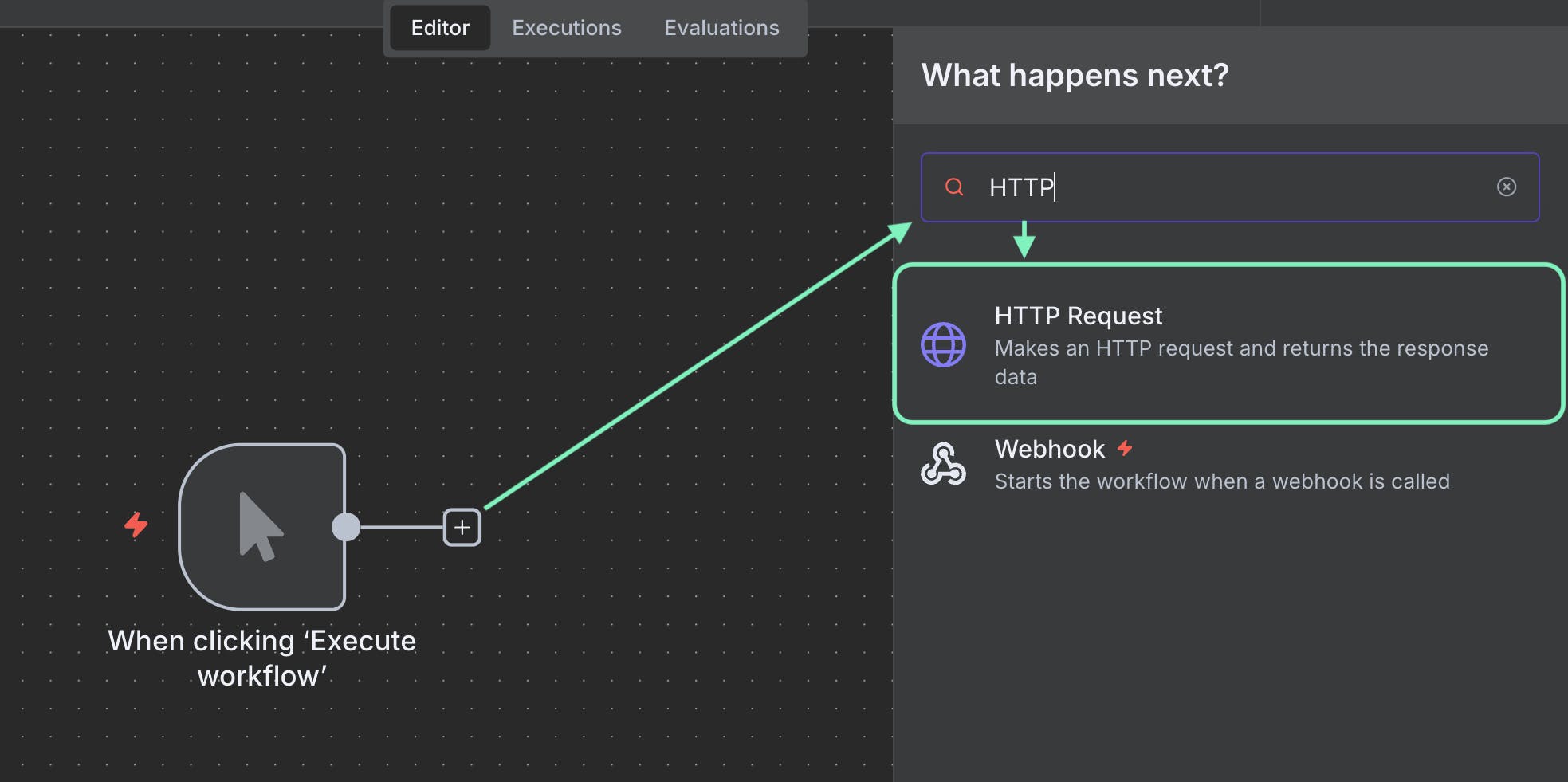

Step 1: Configure the HTTP Request Node with Proxy Support

Start by creating a new workflow in n8n. Add an HTTP Request node and configure it for proxy usage.

Basic Proxy Configuration

In your HTTP Request node, scroll down to the Options section and add the proxy URL:

http://username:password@proxy.roundproxies.com:8080

But here's where most tutorials end. Let's make this smarter.

Building a Dynamic Proxy Rotator

Instead of hardcoding one proxy, we'll create a system that manages multiple proxy endpoints. Add a Code node before your HTTP Request:

// Store your proxy configurations

const proxyPool = [

{

url: 'http://user1:pass1@proxy.roundproxies.com:8080',

region: 'us',

successRate: 1.0,

lastUsed: null,

failures: 0

},

{

url: 'http://user2:pass2@proxy.roundproxies.com:8081',

region: 'eu',

successRate: 1.0,

lastUsed: null,

failures: 0

},

{

url: 'http://user3:pass3@proxy.roundproxies.com:8082',

region: 'asia',

successRate: 1.0,

lastUsed: null,

failures: 0

}

];

// Load previous proxy stats from workflow static data

const staticData = $getWorkflowStaticData('global');

const proxyStats = staticData.proxyStats || {};

// Update pool with saved stats

proxyPool.forEach(proxy => {

if (proxyStats[proxy.url]) {

proxy.successRate = proxyStats[proxy.url].successRate;

proxy.failures = proxyStats[proxy.url].failures;

proxy.lastUsed = proxyStats[proxy.url].lastUsed;

}

});

Now, let's implement intelligent selection logic:

// Smart proxy selection based on success rate and timing

function selectProxy(pool, targetRegion = null) {

const now = Date.now();

const cooldownPeriod = 5000; // 5 seconds between uses

// Filter available proxies

let available = pool.filter(p => {

// Skip if recently used

if (p.lastUsed && (now - p.lastUsed) < cooldownPeriod) {

return false;

}

// Skip if too many failures

if (p.failures > 5) {

return false;

}

// Filter by region if specified

if (targetRegion && p.region !== targetRegion) {

return false;

}

return true;

});

// If no proxies available, reset and use all

if (available.length === 0) {

available = pool.filter(p => p.failures < 10);

}

// Weighted random selection based on success rate

const totalWeight = available.reduce((sum, p) => sum + p.successRate, 0);

let random = Math.random() * totalWeight;

for (const proxy of available) {

random -= proxy.successRate;

if (random <= 0) {

proxy.lastUsed = now;

return proxy;

}

}

// Fallback to first available

return available[0];

}

// Select the best proxy for this request

const selectedProxy = selectProxy(proxyPool, $json.targetRegion);

return {

proxy: selectedProxy.url,

proxyData: selectedProxy

};

Step 2: Implement Request Execution with Error Handling

Now we need to actually make the request and handle potential failures intelligently. Add another Code node after your HTTP Request:

// Get the response from the HTTP node

const response = $input.all()[0];

const proxyData = $node["Proxy Selector"].json.proxyData;

// Load static data

const staticData = $getWorkflowStaticData('global');

let proxyStats = staticData.proxyStats || {};

// Initialize stats for this proxy if needed

if (!proxyStats[proxyData.url]) {

proxyStats[proxyData.url] = {

totalRequests: 0,

successfulRequests: 0,

successRate: 1.0,

failures: 0,

lastUsed: null

};

}

// Check if request was successful

const isSuccess = response.statusCode >= 200 && response.statusCode < 300;

// Update proxy statistics

proxyStats[proxyData.url].totalRequests++;

if (isSuccess) {

proxyStats[proxyData.url].successfulRequests++;

proxyStats[proxyData.url].failures = 0; // Reset failure counter

} else {

proxyStats[proxyData.url].failures++;

}

// Calculate new success rate

proxyStats[proxyData.url].successRate =

proxyStats[proxyData.url].successfulRequests /

proxyStats[proxyData.url].totalRequests;

proxyStats[proxyData.url].lastUsed = Date.now();

// Save updated stats

$getWorkflowStaticData('global').proxyStats = proxyStats;

// Return the response with metadata

return {

success: isSuccess,

statusCode: response.statusCode,

data: response.body,

proxyUsed: proxyData.url,

proxyRegion: proxyData.region,

successRate: proxyStats[proxyData.url].successRate

};

Step 3: Add Intelligent Retry Logic

Failed requests shouldn't just fail silently. Let's add a retry mechanism that switches proxies on failure. Create a Loop node with the following configuration:

// Retry configuration

const maxRetries = 3;

const currentAttempt = $runIndex || 0;

// Check if we should retry

if (currentAttempt >= maxRetries) {

throw new Error('Max retries reached');

}

// Get the last response

const lastResponse = $node["Response Handler"].json;

// Continue loop if request failed

if (!lastResponse.success) {

// Wait before retrying (exponential backoff)

const waitTime = Math.pow(2, currentAttempt) * 1000;

await new Promise(resolve => setTimeout(resolve, waitTime));

return {

shouldRetry: true,

attempt: currentAttempt + 1,

lastError: lastResponse.statusCode

};

}

// Success - exit loop

return {

shouldRetry: false,

finalResponse: lastResponse

};

Step 4: Parse and Extract Data

Once we have a successful response, we need to extract the actual data. Add an HTML Extract node or a Code node for custom parsing:

// Parse HTML response

const cheerio = require('cheerio');

const html = $json.data;

const $ = cheerio.load(html);

// Example: Extract product data

const products = [];

$('.product-item').each((index, element) => {

const product = {

title: $(element).find('.product-title').text().trim(),

price: $(element).find('.price').text().trim(),

url: $(element).find('a').attr('href'),

image: $(element).find('img').attr('src'),

availability: $(element).find('.availability').text().trim(),

scraped_at: new Date().toISOString(),

proxy_region: $json.proxyRegion

};

products.push(product);

});

// Add metadata about the scraping session

return {

products: products,

metadata: {

total_products: products.length,

proxy_used: $json.proxyUsed,

success_rate: $json.successRate,

timestamp: new Date().toISOString()

}

};

Step 5: Build a Proxy Health Monitoring System

Here's where we go beyond basic scraping. Create a separate workflow that monitors your proxy health:

// Proxy health check workflow

const testUrls = [

'http://httpbin.org/ip',

'http://ipinfo.io/json',

'https://api.ipify.org?format=json'

];

const proxyPool = [

// Your proxy configurations

];

const healthResults = [];

for (const proxy of proxyPool) {

const proxyHealth = {

proxy: proxy.url,

region: proxy.region,

tests: [],

overallHealth: 'unknown'

};

for (const testUrl of testUrls) {

try {

const startTime = Date.now();

// Make test request through proxy

const response = await $http.request({

method: 'GET',

url: testUrl,

proxy: proxy.url,

timeout: 10000

});

const responseTime = Date.now() - startTime;

proxyHealth.tests.push({

url: testUrl,

success: true,

responseTime: responseTime,

statusCode: response.statusCode

});

} catch (error) {

proxyHealth.tests.push({

url: testUrl,

success: false,

error: error.message

});

}

}

// Calculate overall health score

const successRate = proxyHealth.tests.filter(t => t.success).length / proxyHealth.tests.length;

const avgResponseTime = proxyHealth.tests

.filter(t => t.success)

.reduce((sum, t) => sum + t.responseTime, 0) / proxyHealth.tests.filter(t => t.success).length;

proxyHealth.overallHealth = successRate === 1 ? 'healthy' :

successRate > 0.5 ? 'degraded' :

'unhealthy';

proxyHealth.successRate = successRate;

proxyHealth.avgResponseTime = avgResponseTime;

healthResults.push(proxyHealth);

}

// Store health results for use in main workflow

$getWorkflowStaticData('global').proxyHealth = healthResults;

return healthResults;

Step 6: Handle Rate Limiting and Throttling

Smart scrapers don't hammer servers. Add a rate limiter to your workflow:

// Rate limiting implementation

const rateLimits = {

'example.com': { requests: 10, window: 60000 }, // 10 req/min

'api.example.com': { requests: 100, window: 3600000 }, // 100 req/hour

'default': { requests: 30, window: 60000 } // 30 req/min default

};

function getRateLimiter(domain) {

const staticData = $getWorkflowStaticData('global');

const requestLog = staticData.requestLog || {};

if (!requestLog[domain]) {

requestLog[domain] = [];

}

const now = Date.now();

const limit = rateLimits[domain] || rateLimits.default;

// Clean old entries

requestLog[domain] = requestLog[domain].filter(

timestamp => now - timestamp < limit.window

);

// Check if we can make a request

if (requestLog[domain].length >= limit.requests) {

const oldestRequest = requestLog[domain][0];

const waitTime = limit.window - (now - oldestRequest);

return {

canProceed: false,

waitTime: waitTime,

currentRate: requestLog[domain].length

};

}

// Log this request

requestLog[domain].push(now);

$getWorkflowStaticData('global').requestLog = requestLog;

return {

canProceed: true,

currentRate: requestLog[domain].length,

limit: limit.requests

};

}

// Use in your workflow

const targetUrl = new URL($json.url);

const rateLimit = getRateLimiter(targetUrl.hostname);

if (!rateLimit.canProceed) {

// Wait before proceeding

await new Promise(resolve =>

setTimeout(resolve, rateLimit.waitTime)

);

}

Step 7: Implement Session Management for Complex Scraping

Some sites require maintaining sessions across multiple requests. Here's how to handle that with Roundproxies' sticky sessions:

// Session management for multi-step scraping

class ScrapingSession {

constructor(proxy, cookieJar = {}) {

this.proxy = proxy;

this.cookies = cookieJar;

this.sessionId = this.generateSessionId();

}

generateSessionId() {

return 'session_' + Date.now() + '_' + Math.random().toString(36);

}

async request(url, options = {}) {

const requestOptions = {

...options,

proxy: this.proxy,

headers: {

...options.headers,

'Cookie': this.formatCookies(),

'User-Agent': this.getUserAgent()

}

};

const response = await $http.request(requestOptions);

// Update cookies from response

this.updateCookies(response.headers);

return response;

}

formatCookies() {

return Object.entries(this.cookies)

.map(([key, value]) => `${key}=${value}`)

.join('; ');

}

updateCookies(headers) {

const setCookieHeader = headers['set-cookie'];

if (setCookieHeader) {

// Parse and store new cookies

const cookies = Array.isArray(setCookieHeader) ?

setCookieHeader : [setCookieHeader];

cookies.forEach(cookie => {

const [nameValue] = cookie.split(';');

const [name, value] = nameValue.split('=');

this.cookies[name.trim()] = value.trim();

});

}

}

getUserAgent() {

const userAgents = [

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36',

'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36'

];

// Use consistent user agent per session

if (!this.userAgent) {

this.userAgent = userAgents[Math.floor(Math.random() * userAgents.length)];

}

return this.userAgent;

}

}

// Usage example: Login and scrape protected content

const session = new ScrapingSession($json.proxy);

// Step 1: Login

const loginResponse = await session.request('https://example.com/login', {

method: 'POST',

body: JSON.stringify({

username: 'user',

password: 'pass'

})

});

// Step 2: Access protected page using same session

const protectedContent = await session.request('https://example.com/dashboard');

return {

sessionId: session.sessionId,

content: protectedContent.body,

cookies: session.cookies

};

Advanced Techniques: Bypassing Anti-Bot Measures

While n8n's HTTP Request node handles basic requests well, sophisticated anti-bot systems require additional strategies:

Browser Fingerprint Randomization

function generateFingerprint() {

const fingerprint = {

'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

'Accept-Language': randomChoice(['en-US,en;q=0.9', 'en-GB,en;q=0.9', 'de-DE,de;q=0.9']),

'Accept-Encoding': 'gzip, deflate, br',

'DNT': Math.random() > 0.5 ? '1' : '0',

'Connection': 'keep-alive',

'Upgrade-Insecure-Requests': '1'

};

return fingerprint;

}

function randomChoice(array) {

return array[Math.floor(Math.random() * array.length)];

}

// Apply to your request

const headers = {

...generateFingerprint(),

'User-Agent': generateUserAgent()

};

Request Pattern Randomization

// Add random delays between requests

function humanizeRequestTiming() {

const baseDelay = 2000; // 2 seconds

const randomDelay = Math.random() * 3000; // 0-3 seconds random

const totalDelay = baseDelay + randomDelay;

// Sometimes add longer "reading" pauses

if (Math.random() < 0.1) { // 10% chance

return totalDelay + 5000 + Math.random() * 10000; // Extra 5-15 seconds

}

return totalDelay;

}

// Randomize request order

function shuffleUrls(urls) {

const shuffled = [...urls];

for (let i = shuffled.length - 1; i > 0; i--) {

const j = Math.floor(Math.random() * (i + 1));

[shuffled[i], shuffled[j]] = [shuffled[j], shuffled[i]];

}

return shuffled;

}

Monitoring and Alerting

Set up a monitoring dashboard to track your scraping performance:

// Performance monitoring

const metrics = {

totalRequests: 0,

successfulRequests: 0,

failedRequests: 0,

averageResponseTime: 0,

proxyPerformance: {},

errors: []

};

// Track each request

function trackRequest(request, response, proxy) {

metrics.totalRequests++;

if (response.success) {

metrics.successfulRequests++;

} else {

metrics.failedRequests++;

metrics.errors.push({

url: request.url,

error: response.error,

proxy: proxy,

timestamp: new Date().toISOString()

});

}

// Update proxy-specific metrics

if (!metrics.proxyPerformance[proxy]) {

metrics.proxyPerformance[proxy] = {

requests: 0,

successes: 0,

failures: 0

};

}

metrics.proxyPerformance[proxy].requests++;

if (response.success) {

metrics.proxyPerformance[proxy].successes++;

} else {

metrics.proxyPerformance[proxy].failures++;

}

// Alert if success rate drops below threshold

const successRate = metrics.successfulRequests / metrics.totalRequests;

if (successRate < 0.8 && metrics.totalRequests > 100) {

sendAlert('Success rate below 80%', metrics);

}

}

function sendAlert(message, data) {

// Send to Slack, email, or other notification service

// This would connect to n8n's Slack or Email node

return {

alert: message,

metrics: data,

timestamp: new Date().toISOString()

};

}

Common Pitfalls and Solutions

Issue: Proxies Getting Blocked Despite Rotation

Solution: Implement request fingerprinting and pattern analysis:

// Detect blocking patterns

function analyzeBlockingPatterns(failedRequests) {

const patterns = {

timeBasedBlocks: [],

urlPatternBlocks: [],

proxySpecificBlocks: []

};

// Group failures by hour

const hourlyFailures = {};

failedRequests.forEach(req => {

const hour = new Date(req.timestamp).getHours();

hourlyFailures[hour] = (hourlyFailures[hour] || 0) + 1;

});

// Find peak failure hours

Object.entries(hourlyFailures).forEach(([hour, count]) => {

if (count > failedRequests.length * 0.2) { // More than 20% failures in this hour

patterns.timeBasedBlocks.push({

hour: parseInt(hour),

failureRate: count / failedRequests.length

});

}

});

return patterns;

}

Issue: Memory Leaks in Long-Running Workflows

Solution: Implement proper cleanup and memory management:

// Clean up old data periodically

function cleanupStaticData() {

const staticData = $getWorkflowStaticData('global');

const now = Date.now();

const retentionPeriod = 24 * 60 * 60 * 1000; // 24 hours

// Clean old request logs

if (staticData.requestLog) {

Object.keys(staticData.requestLog).forEach(domain => {

staticData.requestLog[domain] = staticData.requestLog[domain]

.filter(timestamp => now - timestamp < retentionPeriod);

});

}

// Clean old proxy stats

if (staticData.proxyStats) {

Object.keys(staticData.proxyStats).forEach(proxy => {

if (now - staticData.proxyStats[proxy].lastUsed > retentionPeriod * 7) {

delete staticData.proxyStats[proxy];

}

});

}

$getWorkflowStaticData('global').lastCleanup = now;

}

Performance Optimization Tips

1. Batch Processing for Large-Scale Scraping

Instead of processing URLs one by one, batch them for parallel execution:

// Batch processor with concurrency control

async function processBatch(urls, batchSize = 5) {

const results = [];

for (let i = 0; i < urls.length; i += batchSize) {

const batch = urls.slice(i, i + batchSize);

// Process batch in parallel

const batchPromises = batch.map(async (url) => {

const proxy = selectProxy(proxyPool);

return scrapeUrl(url, proxy);

});

const batchResults = await Promise.all(batchPromises);

results.push(...batchResults);

// Add delay between batches

await new Promise(resolve =>

setTimeout(resolve, humanizeRequestTiming())

);

}

return results;

}

2. Caching to Reduce Redundant Requests

// Simple in-memory cache with TTL

class RequestCache {

constructor(ttl = 3600000) { // 1 hour default

this.cache = {};

this.ttl = ttl;

}

set(key, value) {

this.cache[key] = {

value: value,

expiry: Date.now() + this.ttl

};

}

get(key) {

const cached = this.cache[key];

if (!cached) return null;

if (Date.now() > cached.expiry) {

delete this.cache[key];

return null;

}

return cached.value;

}

clear() {

this.cache = {};

}

}

// Use cache in your scraping workflow

const cache = new RequestCache();

const cacheKey = `${url}_${JSON.stringify(params)}`;

const cached = cache.get(cacheKey);

if (cached) {

return cached;

}

const result = await scrapeUrl(url);

cache.set(cacheKey, result);

return result;

Conclusion

This setup transforms n8n from a simple automation tool into a sophisticated web scraping platform. By combining n8n's visual workflow capabilities with Roundproxies' robust proxy infrastructure, you get:

- Resilient scraping that adapts to failures

- Smart proxy rotation based on performance metrics

- Built-in monitoring to catch issues early

- Session management for complex multi-step scraping

- Rate limiting to respect target servers

The beauty of this approach? You're not locked into rigid scraping frameworks. Every component is modular, testable, and adjustable through n8n's interface. When requirements change, you tweak nodes, not rewrite code.

Start with the basic proxy rotation setup, then layer on the advanced features as your scraping needs grow. The monitoring and health check systems might seem like overkill for small projects, but they're lifesavers when you're scraping thousands of pages daily.

Remember: successful web scraping isn't about brute force - it's about being smart, respectful, and adaptive. This n8n + Roundproxies combination gives you all three.

![IPRoyal vs Oxylabs: Which provider is better? [2026]](https://cdn.roundproxies.com/blog-images/2026/02/iproyal-vs-oxylabs-1.png)